From the very beginning, NASA has understood that space photography plays a vital role in its missions. By releasing images of a lonely blue planet or footage of astronauts in space, the agency elegantly communicates its achievements to the public and inspires future engineers and scientists.

Over time, space photography evolved from pointing a camera out of a spacecraft window to using powerful mirrors and telescopes to photograph distant objects. To share these images with the world, NASA had to overcome challenges ranging from the imaging technology itself to data storage and transfer. From the original Pale Blue Dot image to the new shots from the James Webb Space Telescope, the visual story of space exploration has always relied on data.

Ultraviolet, infrared, and radio waves: how do they work?

Space is a dynamic place, full of harsh conditions and unpredictable factors, so photographing it requires many different techniques. For example, large areas of the Milky Way cannot be seen at visible wavelengths because cosmic dust hides them. Most attempts to capture these regions in visible light have failed, but radio waves pass through cosmic dust.

Discoveries like radio imaging underlie many of the most common techniques in space photography. Ultraviolet photography makes it possible to detect distant planets and stars by creating two-dimensional images of ultraviolet radiation. On the other side of the visible spectrum, infrared cameras capture the infrared radiation that objects emit. Radio photography, in turn, creates an image by scanning the sky and assigning the measured data to each pixel, building up a mosaic of space.
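To make the idea of assigning image data to each pixel more concrete, here is a minimal Python sketch of gridding a raster scan into an image. It assumes each measurement arrives as a hypothetical (row, column, intensity) triple and simply averages samples that land on the same pixel. Real radio imaging pipelines, especially interferometric ones like MeerKAT's, are far more involved, and `grid_scan` is an illustrative name, not any observatory's API.

```python
def grid_scan(samples, height, width):
    """Average (row, col, intensity) samples into a height x width pixel grid.

    Pixels hit more than once (overlapping scans) get the mean value;
    pixels never observed stay None.
    """
    sums = [[0.0] * width for _ in range(height)]
    counts = [[0] * width for _ in range(height)]
    for row, col, value in samples:
        sums[row][col] += value
        counts[row][col] += 1
    return [
        [sums[r][c] / counts[r][c] if counts[r][c] else None for c in range(width)]
        for r in range(height)
    ]


# A toy raster scan over a 2 x 3 patch of sky; pixel (1, 1) is scanned twice
# and pixel (1, 2) is never observed.
samples = [(0, 0, 1.0), (0, 1, 2.0), (0, 2, 3.0), (1, 0, 4.0), (1, 1, 5.0), (1, 1, 7.0)]
for line in grid_scan(samples, height=2, width=3):
    print(line)
```

Averaging overlaps is the simplest possible choice; it keeps the example short while still showing how many separate measurements combine into one mosaic.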

These methods require a huge amount of data to create a single photo. The image of the center of the Milky Way obtained by the MeerKAT radio telescope in South Africa is a composite of 20 separate radio observations. Creating that 1,000-by-600-light-year panorama took 70 terabytes of radio data and three years of processing.

Back and forth

Seventy terabytes is a lot of data, and that is for just one image. Current missions generate data continuously, sometimes up to 100 terabytes per day. Storing data during spaceflight and transmitting it back to Earth for processing are therefore key steps in space photography.
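For a sense of scale, the arithmetic below converts 100 terabytes per day into a sustained data rate. It assumes decimal terabytes ($10^{12}$ bytes) and data spread evenly over a 24-hour day, so it is a back-of-the-envelope estimate rather than a figure from any specific mission.

```latex
\frac{100\ \text{TB}}{1\ \text{day}}
  = \frac{100 \times 10^{12}\ \text{bytes} \times 8\ \text{bits/byte}}{86\,400\ \text{s}}
  \approx 9.26 \times 10^{9}\ \text{bits/s}
  \approx 9.3\ \text{Gbit/s}
```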

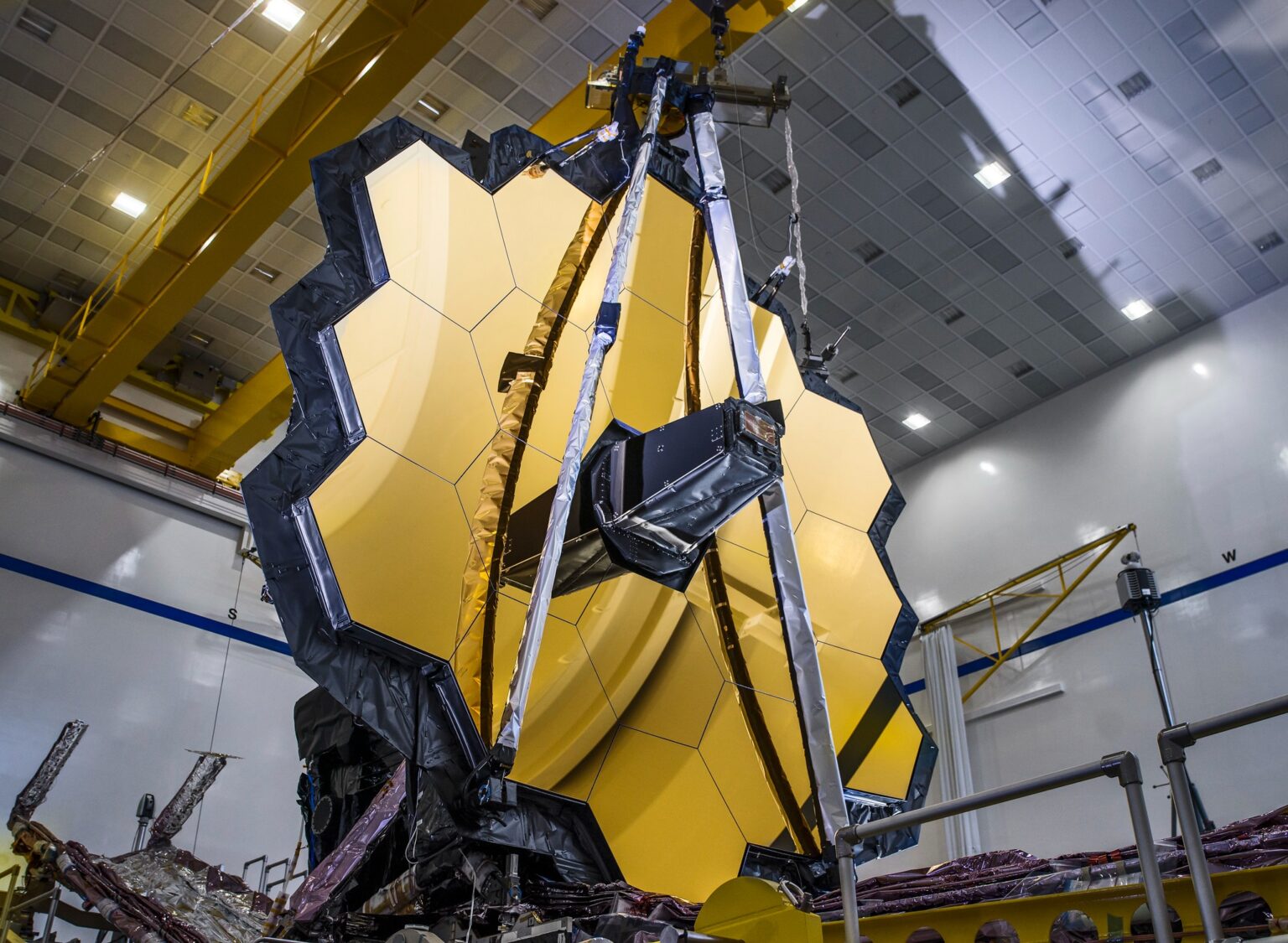

Data from satellites and cameras is collected and stored on board the spacecraft. In an article for VentureBeat, Yaniv Yarovychi, marketing director of Western Digital's IoT segment, discussed the unique challenges of storing data in space. Yarovychi emphasizes the importance of data reliability and integrity. A mission like the James Webb Space Telescope costs so much money, time, and effort that it would be a shame to lose the collected data because a drive failed somewhere in space.

And because these missions capture images that humanity may never be able to reproduce, the data must remain usable when it reaches Earth.
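One common way to check that data survived storage and transfer intact is to record a cryptographic digest when the data is written and compare it on arrival. The Python sketch below does this with SHA-256 from the standard library. It illustrates integrity checking in general and is not a description of how NASA or Western Digital actually verify mission data; `sha256_hex` and `verify_downlink` are hypothetical names.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()


def verify_downlink(received: bytes, expected_digest: str) -> bool:
    """Return True if the received bytes match the digest recorded on board."""
    return sha256_hex(received) == expected_digest


# On board (hypothetical flow): compute a digest when the image is stored.
image = bytes(range(256)) * 4
digest = sha256_hex(image)

# On the ground: an intact copy passes, a copy with one flipped bit fails.
print(verify_downlink(image, digest))             # True
corrupted = bytearray(image)
corrupted[10] ^= 0x01
print(verify_downlink(bytes(corrupted), digest))  # False
```

A digest only detects damage; recovering the original still requires redundancy or a retransmission.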

Data protection

Storage devices must deliver this level of reliability while withstanding the stresses of rocket launch, atmospheric exit, extreme space temperatures, and galactic cosmic rays. According to Yarovychi, these goals and obstacles lie at the heart of an approach known as reliability design. With this in mind, NASA and Western Digital created a standard called Design for Reliability, which is implemented in data storage devices to protect information.
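A classic reliability technique against radiation-induced bit flips is to keep redundant copies of the data and take a majority vote, as in triple modular redundancy. The sketch below shows a bitwise two-out-of-three vote in Python. It is offered as a generic example of designing for reliability, not as what the Design for Reliability standard specifies; `majority_vote` and the simulated upsets are hypothetical.

```python
def majority_vote(a: bytes, b: bytes, c: bytes) -> bytes:
    """Bitwise 2-of-3 majority vote across three redundant copies."""
    if not (len(a) == len(b) == len(c)):
        raise ValueError("copies must have equal length")
    # A result bit is 1 when at least two of the three copies have a 1 there.
    return bytes((x & y) | (x & z) | (y & z) for x, y, z in zip(a, b, c))


original = b"pale blue dot"
copy_a = bytearray(original)
copy_b = bytearray(original)
copy_c = bytearray(original)
copy_b[0] ^= 0x40  # simulated single-event upset in copy B
copy_c[5] ^= 0x01  # a different upset in copy C

restored = majority_vote(bytes(copy_a), bytes(copy_b), bytes(copy_c))
print(restored == original)  # True
```

The vote succeeds here because no bit position is corrupted in more than one copy; two upsets hitting the same bit in two copies would defeat it.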

Once the data is securely stored, it must be transmitted back to Earth via radio waves, and here too reliability and integrity are important. Mission control centers carefully monitor the speed and volume of data transfers to keep the data intact. It is a balancing act: the stored data must never exceed the spacecraft's storage capacity, and at the same time nothing may be lost in transmission. It is worth noting that the built-in solid-state storage on board James Webb holds only 68 GB.
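The balancing act can be illustrated with a toy day-by-day model of an on-board recorder. Only the 68 GB capacity comes from the text; the daily collection and downlink volumes are hypothetical, and the model simplifies by writing each day's data before that day's downlink and counting any overflow as lost. `simulate_recorder` is an illustrative name.

```python
def simulate_recorder(capacity_gb, collected_per_day, downlinked_per_day):
    """Track on-board storage fill level day by day.

    Each day, new data is written first; anything beyond capacity is counted
    as lost. Then the scheduled downlink drains the recorder.
    Returns (fill level after each day, total GB lost to overflow).
    """
    level = 0.0
    lost = 0.0
    history = []
    for collected, downlinked in zip(collected_per_day, downlinked_per_day):
        level += collected
        if level > capacity_gb:
            lost += level - capacity_gb
            level = capacity_gb
        level = max(0.0, level - downlinked)
        history.append(level)
    return history, lost


# Hypothetical schedule: a missed ground contact on day 3 lets data pile up.
history, lost = simulate_recorder(
    capacity_gb=68.0,
    collected_per_day=[50, 50, 50, 50],
    downlinked_per_day=[50, 50, 0, 50],
)
print(history, lost)  # [0.0, 0.0, 50.0, 18.0] 32.0
```

In this made-up schedule, one missed downlink is enough to overflow the recorder the next day and lose 32 GB, which is why transfer rates and volumes have to be planned together with storage capacity.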

To visualize and share this painstaking work, NASA created Deep Space Network Now as part of the NASA's Eyes program. Deep Space Network Now lets the public watch real-time communication and data transfer with ongoing NASA missions by displaying dashboards for the network's three main communication complexes in Spain, Australia, and the USA. Distance, travel time, bitrate, and other data paint a vivid picture of the data's perilous journey.
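Two of the numbers such a dashboard shows, distance and bitrate, determine how long data takes to arrive. The Python sketch below computes the one-way light time from distance and the time to send a block of data at a constant bitrate, ignoring protocol overhead and gaps between ground contacts. The 1.5 million km distance is roughly JWST's distance from Earth; the 28 Mbit/s rate is a hypothetical example, not a quoted mission figure.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact, by definition of the metre


def one_way_light_time_s(distance_km: float) -> float:
    """Seconds for a radio signal to cover distance_km in a vacuum."""
    return distance_km / SPEED_OF_LIGHT_KM_S


def transfer_time_s(data_bytes: float, bitrate_bps: float) -> float:
    """Seconds to send data_bytes at a constant bitrate, ignoring overhead."""
    return data_bytes * 8 / bitrate_bps


# Signal delay at roughly JWST's distance, and the time to empty a full
# 68 GB recorder at a hypothetical 28 Mbit/s downlink.
print(f"{one_way_light_time_s(1.5e6):.1f} s")          # 5.0 s
print(f"{transfer_time_s(68e9, 28e6) / 3600:.1f} h")   # 5.4 h
```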

Shoot for the stars

Beyond its business and scientific benefits, space imaging is critical for communicating NASA's fundamental objectives. Science can be hard for a layperson to appreciate because of its technical language and mathematics, but pictures are universal. These images tell the story of where we look and what we see, and of the hard work of countless engineers and scientists.

The images captured by JWST and other spacecraft inspire people today to keep moving forward, imagining, and exploring. That inspiration is the foundation of many scientific careers, whether in astrophysics or environmental science. These people may never travel to space, but they can still land among the stars of science.


According to The New York Times