Communication with artificial intelligence has become available to all Internet users in recent months. Neural networks generate answers to complex questions, write poems and draw pictures. Some predict that humanity’s journey in the study of this universe is over and that intelligent machines will replace us. But to judge whether this is so, it is worth remembering where this story began.

The greatest scientific mysteries

“Two things fill the mind with ever new and increasing admiration and awe, the more often and steadily we reflect upon them: the starry heavens above me and the moral law within me,” the outstanding German philosopher Immanuel Kant once proclaimed. These words aptly characterize two branches of science that pose the greatest challenge to modernity and promise us incredible miracles.

The first of them is the exploration and development of space. The second is the study of human intelligence and the creation of its artificial analogues. And while our path to the stars promises to be long and tedious, we can entertain ourselves by communicating with artificial intelligence right now.

People who work with ChatGPT and Midjourney are amazed by the texts and images that the AI produces. Many paint apocalyptic scenarios in which artificial intelligence will begin tomorrow to replace us in all the roles we considered exclusively ours, because we are used to seeing ourselves as the sole crown of evolution.

It can already be said that artificial intelligence will fly to the stars, and the only question is whether humans will accompany it on this journey. At the same time it is quite possible that we overestimate the capabilities of our machines.

Turing test

When we say we are amazed that the work of artificial intelligence is indistinguishable from that of a human, we are in effect certifying that it has passed the Turing test. Our understanding of artificial intelligence rests mostly on this concept.

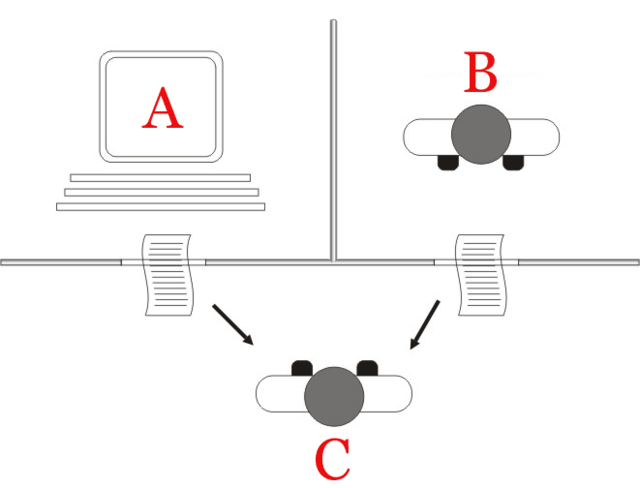

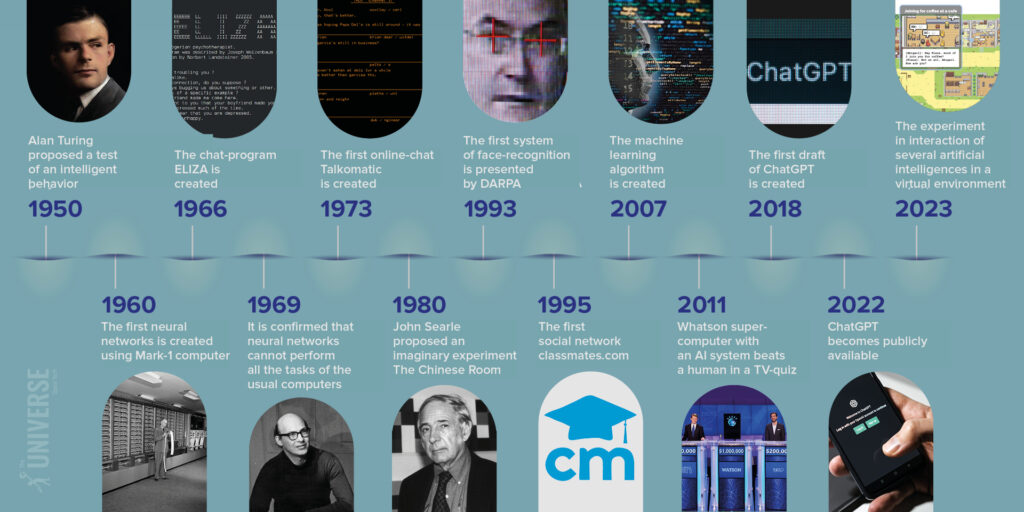

The original test, invented in 1950 by the outstanding British mathematician Alan Turing, was as follows. A human experimenter exchanges written messages with two interlocutors (a human and a machine). Answers are received at equal intervals. If in the process of communication the experimenter cannot tell which of the messages were written by the machine, then the test is considered to be passed.

Turing knew what he was doing. He was the greatest theoretician and practitioner of computer technology of his time. To this day, at the conceptual level, all our computers work the way he envisioned. Moreover, it was this scientist who created a machine able to solve an intellectual problem insurmountable for the human mind: deciphering an extremely complex enemy cipher.

But Turing actually cheated. In his time, the question of what really happens in the human brain remained as much of a dark forest as it had been in Kant’s time, a century and a half before. Therefore, the mathematician chose a practical approach: if one consciously intelligent system recognizes another as intelligent, then the latter really is.

Language models and the Chinese room

When mentioning Alan Turing’s test, one often forgets the important fact that he never communicated with any computer in the manner described. In fact, he never saw such a chat at all. We can only guess how computers would have developed had Turing not died prematurely.

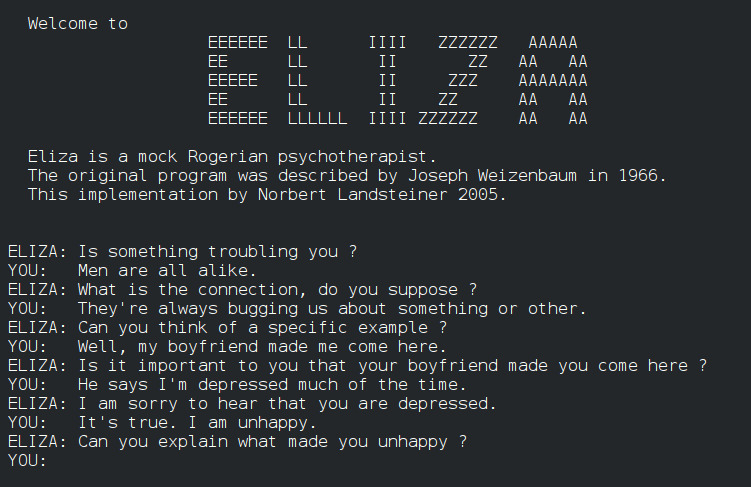

In any case, people managed to enter into a dialogue with a machine only in the mid-1960s, about ten years after his death. The program was called ELIZA. It rearranged the words of the question itself and reacted to certain key words in it. A person who was really eager to talk to someone could easily forget that it was a machine, but any attentive interlocutor would quickly understand that the conversation was senseless and led nowhere.

ELIZA did not understand the meaning of the words. It relied on the fact that human language has a certain structure, and that certain words in it line up in a certain way. What we have here is a fairly complex, but still purely mathematical pattern.
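The keyword-and-rearrangement trick described above can be sketched in a few lines. This is only an illustration: the rules, the `REFLECTIONS` table and the function names are invented for this example and are not taken from ELIZA's original script.

```python
import re

# Hypothetical rules: (keyword pattern, reply template).
# They are invented for this sketch, not copied from the original ELIZA.
RULES = [
    (re.compile(r"\bI am (.+)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so the echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Rearrange the user's own words by swapping pronouns."""
    words = fragment.rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in words)


def respond(message: str) -> str:
    """Answer with the first rule whose keyword appears in the message."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            return template.format(reflect(match.group(1)))
    # No keyword found: fall back to a content-free prompt.
    return "Please go on."


print(respond("I am worried about my job."))
```

Nothing in this program represents what a job or a worry is. It only matches character patterns and echoes them back, which is why such a conversation leads nowhere.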

By analyzing the statistical distribution of words in a large body of texts, you can juggle words far more convincingly than ELIZA did. Such systems are called language models, and all modern chatbots, including ChatGPT itself, are based on this same principle.

ChatGPT essentially looks up the statistically most fitting next word for its answer. That is why it seems so natural. This is exactly the set of words that a person who knows something about the topic of the question could use in the answer.
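The idea of choosing the statistically most fitting next word can be shown with a toy bigram model. This is a deliberately tiny sketch: the corpus and the function names are made up for the example, and real chatbots use neural networks trained on vastly larger text collections rather than a simple table of word pairs.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts: dict, word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts: dict, start: str, length: int = 5) -> str:
    """Greedily chain the most likely next words, starting from `start`."""
    output = [start.lower()]
    for _ in range(length - 1):
        next_word = most_likely_next(counts, output[-1])
        if next_word is None:
            break
        output.append(next_word)
    return " ".join(output)


# A made-up miniature corpus standing in for "a certain volume of texts".
corpus = "the cat sat on the mat the cat sat on the sofa"
model = train_bigrams(corpus)
print(generate(model, "the"))
```

The output looks like a sentence fragment only because the word-pair counts were taken from sentences. The model has no notion of cats or sofas.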

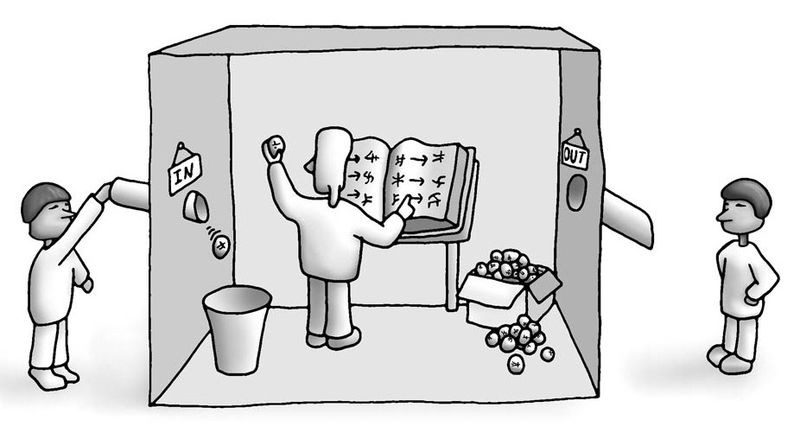

ChatGPT passes the Turing test, but that doesn’t mean much. Back in 1980, the American philosopher John Searle published a paper describing a thought experiment called the Chinese Room. Let’s put a person who does not know Chinese into a closed room. Then we give him instructions on how to arrange Chinese characters in response to the combinations of characters he receives.

The person in the room will hand out texts that native Chinese speakers can read and interpret as a meaningful response. At the same time, the person in the room will have no idea what this dialogue is about.

And it’s really very similar to how ChatGPT works. Therefore, many researchers rightly point out that no matter how much its statistical mechanisms for selecting the right words from texts on the Internet are improved, it still remains a Chinese room that is not even aware that the symbols making up its plausible answers actually have a meaning.

Neural networks as artificial intelligence

While some scientists were playing with language models, others managed to understand a bit about the mechanics of the human brain. The question of how feelings arise still appears quite vague, but it became clear that nothing in a human head works by the principles Turing laid down as the basis of our computers.

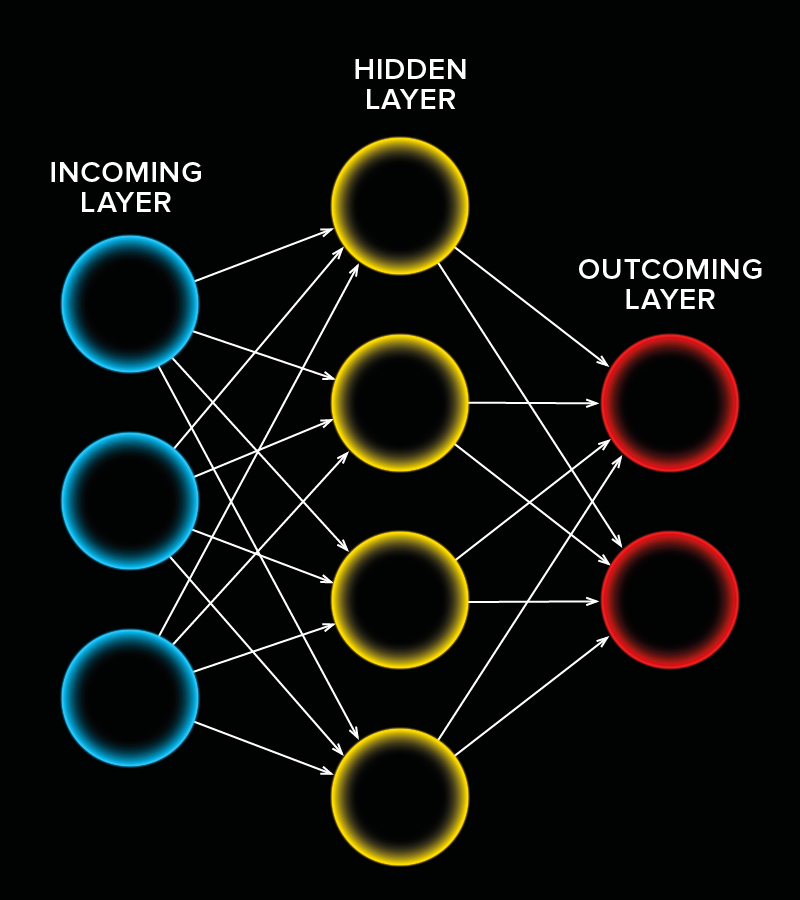

Instead of running programs in our heads, no matter how complex and extensive, we run information through a network of neurons. Each neuron has specific settings for interacting with that information, and all of them work together as a machine for recognizing patterns and situations based on previous experience.

It is easiest to imagine it as a set of sieves through which balls of different sizes and colors fall. The task is to sort them and distribute them into different boxes at the bottom. Imagine being able to change the position and mesh size of the sieves, and do other tricks, so that the balls are always sorted correctly, regardless of the pattern in which they fall.

The simplest model of a neural network. Source: Wikipedia
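The sieve analogy can be made concrete with the smallest possible network: a single artificial neuron (a perceptron) whose settings are adjusted after every mistake. The sketch below is only an illustration. The ball features, the training data and the function names are assumptions chosen for this example.

```python
def step(value: float) -> int:
    """The neuron fires (1) when its weighted input reaches the threshold."""
    return 1 if value >= 0 else 0


def predict(weights, bias, inputs) -> int:
    """Pass one ball through the 'sieve': weighted sum, then threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, epochs: int = 20, learning_rate: float = 1.0):
    """Adjust the neuron's settings whenever it sorts a ball into the wrong box."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Nudge each setting in the direction that reduces the error.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical balls described as (is_large, is_red).
# Box 1 is for balls that are both large and red, box 0 for all others.
balls = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(balls)
for features, box in balls:
    print(features, "->", predict(weights, bias, features), "expected", box)
```

Nobody writes a rule saying "large and red goes into box 1". The neuron arrives at suitable settings from examples alone, which is the key difference from a conventional program.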

The first artificial neural networks appeared in the late 1950s. Scientists experimented with them until the end of the 1960s, when they found out that the networks were either utterly unable to perform the main tasks facing computing at that time, or performed them much worse than computers of traditional architecture.

Neural networks were put in storage for 30 long years, until in the 1990s it became clear that some of the new problems facing the world-wide computer network could not be solved by traditional computers, no matter how much power was added to them.

Then neural networks were taken out of the drawer, and for almost three decades now they have been the source of all new achievements by AI. They are the ones that learned to recognize people by their faces, to win chess and StarCraft competitions against human champions, and so on. Astronomers also widely use them to recognize objects of certain classes in images or to find the most appropriate physical models.

ChatGPT also contains a neural network. Theoretically, it could learn anything, but the developers trained it only to select words and, in fact, the machine is not capable of anything else. And this is what makes it doubtful that one day AI will be able to replace us.

What is intelligence?

All the neural networks that people have trained in the last 20 years are highly specialized. This means that they know how to find certain patterns in a large volume of data, but only that. If they are deliberately given an unsuitable data set for analysis, they still try to find some “correct” answer.

It’s akin to children seeing animals and people in the clouds floating above them. If a neural network trained to recognize human faces is given images of galaxies to process, it will pick out those that look like eyes and noses, even if it’s just a superimposition of completely different objects.

Highly specialized artificial neural networks reveal signs of intelligence only in the context of the tasks for which they were trained. It’s not that they simply can’t understand other tasks — they don’t even know those tasks exist.

Machines can perform complex intellectual tasks better than humans, but we still cannot call them intelligent, because we can always come up with a test of our own, different from the one invented by Alan Turing, that a machine cannot pass.

Boston Dynamics robots are also capable of many things

And all this is because human intelligence is not only the ability to put words in the correct order or to recognize a certain pattern in an image. It is all of these together, plus a bunch of other skills.

We have learned a lot about how our brain works, but we still cannot give a definition of natural intelligence that would cover all the phenomena related to it and would also satisfy Kant.

What does artificial intelligence lack to take over the world?

Experiments with language models and highly specialized neural networks cannot be deemed completely meaningless. Thanks to them, we understood what AI lacks in order to become completely similar to us.

First and foremost is a much broader context. The natural human neural network evolved in the real world, where the main task was to use senses to survive and navigate in a space cluttered with a huge number of objects. Social tasks — not to mention the operation of complex symbolic systems — are only a superstructure on top of all this.

Artificial intelligence, by contrast, is primarily used to work with symbols, mathematical models and images. The robots presented to the public from time to time mostly remain expensive toys of varying degrees of clumsiness. They are especially uncomfortable in unstructured environments, where too many factors need to be taken into account and remembered.

In fact, a memory that stores a huge number of different little things is another item that artificial intelligence is sorely lacking at the moment. AI can perfectly simulate communication, but it does not have a context grounded in its own experience.

If we create a system that forms its own memory structure and release it into the world, it is quite possible that it will eventually start asking the same questions that bother us. And then we should worry about the answers it finds.

Social interaction and artificial intelligence

Another aspect, which artificial intelligence developers have so far treated cautiously, is the social one. Man exists in society. Our intelligence is manifested in communication with others. So we can suppose that an artificial intelligence kept in utter isolation simply will not be able to arrive at the idea that there are people out there who can offend it and therefore deserve to be destroyed.

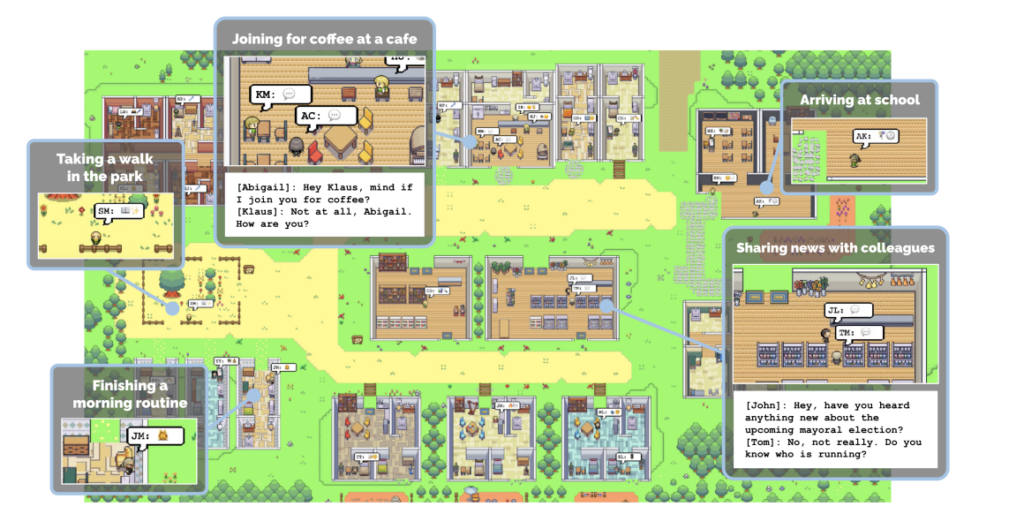

In April 2023, a paper was published describing an experiment by scientists from Stanford University on the interaction of several artificial intelligences in an environment created for them. The scientists created 25 virtual personalities based on ChatGPT, gave them memory blocks that they could fill with events, added a system for summarizing experience and planning, and sent them to walk through a drawn village.

The environment was as simple as possible: in it, you don’t need to calculate the dynamics of your steps so as not to stumble over a brick on the road. But it was detailed enough to reproduce all the processes that take place in small settlements.

And the AI, without any help from people, recreated the dialogues that take place in such settings extremely realistically. So, as of today, this particular experiment can be considered one of the most successful attempts to bring a machine closer to a person.
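To give a feeling for what a memory block that an agent fills with events might look like, here is a minimal sketch. It is not the Stanford team's implementation. The `Memory` and `Agent` classes, the importance values and the scoring formula (recency plus importance plus word overlap) are assumptions made up for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    time: int          # when the event was observed (arbitrary ticks)
    text: str          # plain-language description of the event
    importance: float  # hypothetical score between 0 and 1


@dataclass
class Agent:
    name: str
    memories: list = field(default_factory=list)

    def observe(self, time: int, text: str, importance: float) -> None:
        """Store a new event in the agent's memory."""
        self.memories.append(Memory(time, text, importance))

    def recall(self, now: int, query: str, k: int = 2, decay: float = 0.9) -> list:
        """Return the k memories that score highest for the current situation."""
        query_words = set(query.lower().split())

        def score(memory: Memory) -> float:
            recency = decay ** (now - memory.time)  # older events fade
            overlap = query_words & set(memory.text.lower().split())
            relevance = len(overlap) / len(query_words) if query_words else 0.0
            return recency + memory.importance + relevance

        ranked = sorted(self.memories, key=score, reverse=True)
        return [memory.text for memory in ranked[:k]]


anna = Agent("Anna")
anna.observe(1, "ate breakfast at home", 0.1)
anna.observe(2, "Tom is planning a party at the cafe", 0.8)
anna.observe(3, "watered the plants", 0.1)

# What should Anna remember when someone mentions the party?
print(anna.recall(now=4, query="party at the cafe", k=1))
```

In a setup like this, the recalled memories would be added to the text given to the language model, so that its next line of dialogue reflects the agent's own past rather than only general statistics of language.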

Do artificial intelligences dream of the stars?

At this point, it is worth turning to the Turing test again. The British mathematician proposed it on the assumption that we have some ability to reliably recognize another person by the language he or she uses. In other words, having glanced at the words or actions of a counterpart, we can supposedly discern whether they make sense or not.

Turing died long before the first social networks appeared, and he never had a chance to see how far people’s communication there differs from this ideal. And modern scientists have known since the time of ELIZA that people’s ability to discern meaning strongly depends on their own willingness to see any meaning at all. Bearers of natural intelligence too easily see meaning where it is absent, and fail to see it where it is critically important.

If we continue the Stanford experiment, increase the complexity of the environment and the number of artificial intelligences in it, and give them time to accumulate experience, how quickly will our empathy with them become comparable to our empathy with the inhabitants of some real town on the other side of the globe?

You can, of course, set a bar in the form of some complex idea. For example, an artificial intelligence capable of independently formulating Kant’s opinion about the starry sky and the moral law can be considered equal to us. But then we have to admit that most people are not intelligent at all, for they do not even care about stars.

Of course, you can say that it doesn’t matter whether the AI ever dreams of the stars or not, as long as it does its job. As for people, after all, their actions interest us much more often than their dreams and visions. We just should be aware that, if AI really turns out to be similar to us, then its actions will be determined not by our opinions about it, but by its own sources of sacred inspiration.